Tip: How many virtual machines can a single computer on Google Cloud be divided into?


Bùi Văn Quân is looking up the keyword "How many virtual machines can a single computer on Google Cloud be divided into?", updated on 2022-12-05 23:02:05. This post aims to share tips and guidance on the topic in detail. If anything is still unclear after reading, leave a comment at the end of the post and the Admin will explain it again.

The Google Cloud Platform offers a wide range of Google Compute Engine (GCE) virtual machine (VM) types in various sizes. Choosing the right VM for your workload is an important factor in a successful Tableau Server deployment. For a complete list of all available VM types and sizes, see the Machine Types page on the Google website.

Main contents:

- Typical VM types and sizes for development, test, and production environments
- Recommended specifications for a single production instance
- A brief history of virtualization
- How does virtualization work?
- Types of virtualization
  - Data virtualization
  - Desktop virtualization
  - Server virtualization
  - Operating system virtualization
  - Network functions virtualization
- Why migrate your virtual infrastructure to Red Hat?
- What is the highest number of virtual machines a cloud service can hold?
- How many instances can be created in Google Cloud?
- What is the maximum number of virtual NICs per VM in GCP?
- How many zones can there be in a GCP region?

It is important to select a VM that can run Tableau Server. The VM must meet the Tableau Server hardware guidelines (a minimum of 8 cores and 32 GB of RAM).

At minimum, a 64-bit Tableau Server requires a 4-core CPU (the equivalent of 8 Google Compute Engine vCPUs) and 64 GB RAM. However, a total of 8 CPU cores (16 Google Compute Engine vCPUs) and 64GB RAM are strongly recommended for a single production Google Compute Engine VM.

The Windows operating system recognizes these 16 vCPUs as 8 cores, so there is no negative licensing impact.
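The vCPU-to-core arithmetic above can be sketched in Python. This is a minimal illustration, assuming (as the text does) that each physical core appears as 2 vCPUs on Compute Engine; the function names and thresholds are hypothetical and simply encode the 4-core/64 GB minimum and 8-core/64 GB recommendation quoted above, not any Tableau or Google API.

```python
# Sketch: convert Compute Engine vCPUs to physical cores and compare a VM
# against the Tableau Server figures quoted above.
# Assumption: 2 vCPUs (hardware threads) per physical core.

VCPUS_PER_CORE = 2

# Figures from the text: 4 cores / 64 GB minimum, 8 cores / 64 GB recommended.
MIN_CORES, MIN_RAM_GB = 4, 64
REC_CORES, REC_RAM_GB = 8, 64


def vcpus_to_cores(vcpus: int) -> int:
    """Return the number of physical cores backing `vcpus` vCPUs."""
    if vcpus <= 0 or vcpus % VCPUS_PER_CORE:
        raise ValueError("expected a positive, even vCPU count")
    return vcpus // VCPUS_PER_CORE


def classify(vcpus: int, ram_gb: int) -> str:
    """Label a VM 'recommended', 'minimum', or 'too small'."""
    cores = vcpus_to_cores(vcpus)
    if cores >= REC_CORES and ram_gb >= REC_RAM_GB:
        return "recommended"
    if cores >= MIN_CORES and ram_gb >= MIN_RAM_GB:
        return "minimum"
    return "too small"


print(vcpus_to_cores(16))  # 8, the core count Windows reports
print(classify(16, 64))    # recommended
print(classify(8, 64))     # minimum
print(classify(8, 32))     # too small
```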

Typical VM types and sizes for development, test, and production environments

- n2-standard-16
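To see where a machine type like n2-standard-16 fits, its vCPU count and memory can be read from the name. The sketch below is hypothetical and covers only the N2 family, assuming its predefined ratios of 1 GB (highcpu), 4 GB (standard), and 8 GB (highmem) per vCPU; other families use different ratios, so the Machine Types page remains the authority.

```python
# Sketch: derive vCPUs and memory from an N2 predefined machine-type name.
# Assumption: N2 ratios of 1 GB (highcpu), 4 GB (standard), 8 GB (highmem)
# per vCPU. Other machine families use different ratios.

N2_GB_PER_VCPU = {"highcpu": 1, "standard": 4, "highmem": 8}


def parse_n2_machine_type(name: str) -> tuple[int, int]:
    """Return (vcpus, memory_gb) for a name such as 'n2-standard-16'."""
    family, series, count = name.split("-")
    if family != "n2" or series not in N2_GB_PER_VCPU:
        raise ValueError(f"unsupported machine type: {name}")
    vcpus = int(count)
    return vcpus, vcpus * N2_GB_PER_VCPU[series]


print(parse_n2_machine_type("n2-standard-16"))  # (16, 64)
```

With 16 vCPUs and 64 GB, n2-standard-16 lines up with the 8-core, 64 GB recommendation.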

Recommended specifications for a single production instance

| Component/Resource | Google Cloud Platform |
|---|---|
| CPU | 16+ vCPU |
| Operating system | Tableau Server 2022.3.0 and later: Windows Server 2022. Earlier versions: Tableau Server 2022.1.0 - 2022.2.x: Windows Server 2012, Windows Server 2012 R2, Windows Server 2022; Tableau Server 2022.1.0 - 2022.x: Windows Server 2008 R2, Windows Server 2012, Windows Server 2012 R2, Windows Server 2022 |
| Memory | 64+ GB RAM (4 GB RAM per vCPU) |
| Storage | Two volumes: a 30-50 GiB volume for the operating system and a 100 GiB or larger volume for Tableau Server |
| Storage type | SSD persistent disk, 200 GB or more. For more information about SSD persistent disks, see Storage Options on the Google Cloud Platform website. |
| Disk latency | Less than or equal to 20 ms, as measured by the Avg. Disk sec/Transfer performance counter in Windows |
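A proposed VM can be checked against the recommended specifications above with a few comparisons. This is a sketch under stated assumptions: the `VmSpec` class and its field names are hypothetical, disk-latency samples are taken to be readings of the Windows Avg. Disk sec/Transfer counter (which reports seconds), and averaging several readings is one reasonable way to summarize them, not a Tableau-prescribed method.

```python
# Sketch: check a proposed single-node Tableau Server VM against the
# recommended specifications. VmSpec and its fields are hypothetical.
from dataclasses import dataclass
from statistics import mean


@dataclass
class VmSpec:
    vcpus: int
    ram_gb: int
    os_volume_gib: int
    tableau_volume_gib: int
    latency_samples_s: list[float]  # disk latency readings, in seconds


def spec_problems(vm: VmSpec) -> list[str]:
    """Return the ways `vm` falls short; an empty list means it passes."""
    problems = []
    if vm.vcpus < 16:
        problems.append("fewer than 16 vCPUs")
    if vm.ram_gb < max(64, 4 * vm.vcpus):
        problems.append("less than 64 GB RAM or 4 GB per vCPU")
    if not 30 <= vm.os_volume_gib <= 50:
        problems.append("OS volume outside 30-50 GiB")
    if vm.tableau_volume_gib < 100:
        problems.append("Tableau Server volume under 100 GiB")
    # Readings are in seconds; the threshold is 20 ms.
    if mean(vm.latency_samples_s) * 1000 > 20:
        problems.append("average disk latency above 20 ms")
    return problems


ok = VmSpec(16, 64, 50, 150, [0.004, 0.006, 0.005])
print(spec_problems(ok))  # []

slow = VmSpec(16, 64, 50, 150, [0.030, 0.025])
print(spec_problems(slow))  # ['average disk latency above 20 ms']
```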


Overview

Virtualization is technology that lets you create useful IT services using resources that are traditionally bound to hardware. It allows you to use a physical machine’s full capacity by distributing its capabilities among many users or environments.

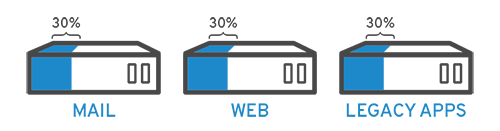

In more practical terms, imagine you have 3 physical servers with individual dedicated purposes. One is a mail server, another is a web server, and the last one runs internal legacy applications. Each server is being used at about 30% capacity, just a fraction of its running potential. But since the legacy apps remain important to your internal operations, you have to keep them and the third server that hosts them, right?

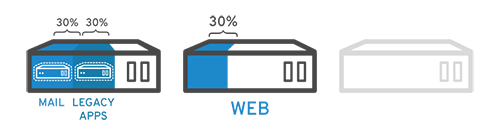

Traditionally, yes. It was often easier and more reliable to run individual tasks on individual servers: 1 server, 1 operating system, 1 task. It wasn’t easy to give 1 server multiple brains. But with virtualization, you can split the mail server into 2 unique ones that can handle independent tasks so the legacy apps can be migrated. It’s the same hardware; you’re just using more of it, more efficiently.

Keeping security in mind, you could split the first server again so it could handle another task—increasing its use from 30%, to 60%, to 90%. Once you do that, the now empty servers could be reused for other tasks or retired altogether to reduce cooling and maintenance costs.
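From the hardware's point of view, this consolidation is a packing problem: fit each workload onto as few physical hosts as possible without exceeding a utilization ceiling. The sketch below uses first-fit decreasing, a common bin-packing heuristic swapped in for illustration (the article does not name a method); the server names and the 90% ceiling come from the example, and the `consolidate` function itself is hypothetical.

```python
# Sketch: consolidate lightly used servers onto fewer hosts.
# Loads are integer percentages of one host's capacity; the 90% ceiling
# mirrors the example above and is an assumption.

def consolidate(loads: dict[str, int], ceiling: int = 90) -> list[dict[str, int]]:
    """First-fit decreasing: place each workload on the first host with room."""
    hosts: list[dict[str, int]] = []
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > ceiling:
            raise ValueError(f"{name} alone exceeds the {ceiling}% ceiling")
        for host in hosts:
            if sum(host.values()) + load <= ceiling:
                host[name] = load
                break
        else:  # no existing host had room, so start a new one
            hosts.append({name: load})
    return hosts


servers = {"mail": 30, "web": 30, "legacy-apps": 30}
plan = consolidate(servers)
print(len(plan))              # 1 physical host instead of 3
print(sum(plan[0].values()))  # 90 (% utilization)
```

First-fit decreasing is a heuristic, so it does not always find the fewest hosts; real placement tools also weigh memory, I/O, and isolation requirements.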

A brief history of virtualization

While virtualization technology can be traced back to the 1960s, it wasn’t widely adopted until the early 2000s. The technologies that enabled virtualization, like hypervisors, were developed decades ago to give multiple users simultaneous access to computers that performed batch processing. Batch processing was a popular computing style in the business sector that ran routine tasks, like payroll, thousands of times very quickly.

But, over the next few decades, other solutions to the many users/single machine problem grew in popularity while virtualization didn’t. One of those other solutions was time-sharing, which isolated users within operating systems—inadvertently leading to other operating systems like UNIX, which eventually gave way to Linux®. All the while, virtualization remained a largely unadopted, niche technology.

Fast forward to the 1990s. Most enterprises had physical servers and single-vendor IT stacks, which didn’t allow legacy apps to run on a different vendor’s hardware. As companies updated their IT environments with less-expensive commodity servers, operating systems, and applications from a variety of vendors, they were bound to underused physical hardware: each server could only run 1 vendor-specific task.

This is where virtualization really took off. It was the natural solution to 2 problems: companies could partition their servers and run legacy apps on multiple operating system types and versions. Servers started being used more efficiently (or not at all), thereby reducing the costs associated with purchase, setup, cooling, and maintenance.

Virtualization’s widespread applicability helped reduce vendor lock-in and made it the foundation of cloud computing. It’s so prevalent across enterprises today that specialized virtualization management software is often needed to help keep track of it all.

How does virtualization work?

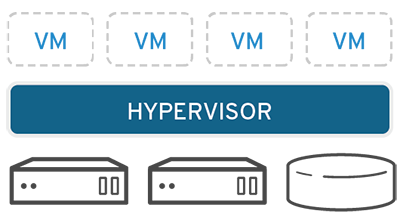

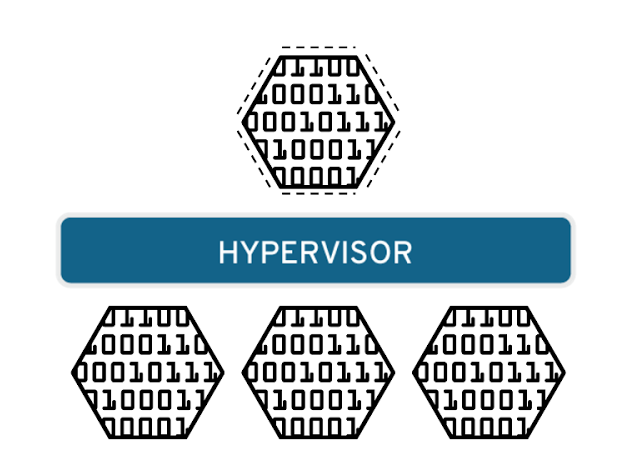

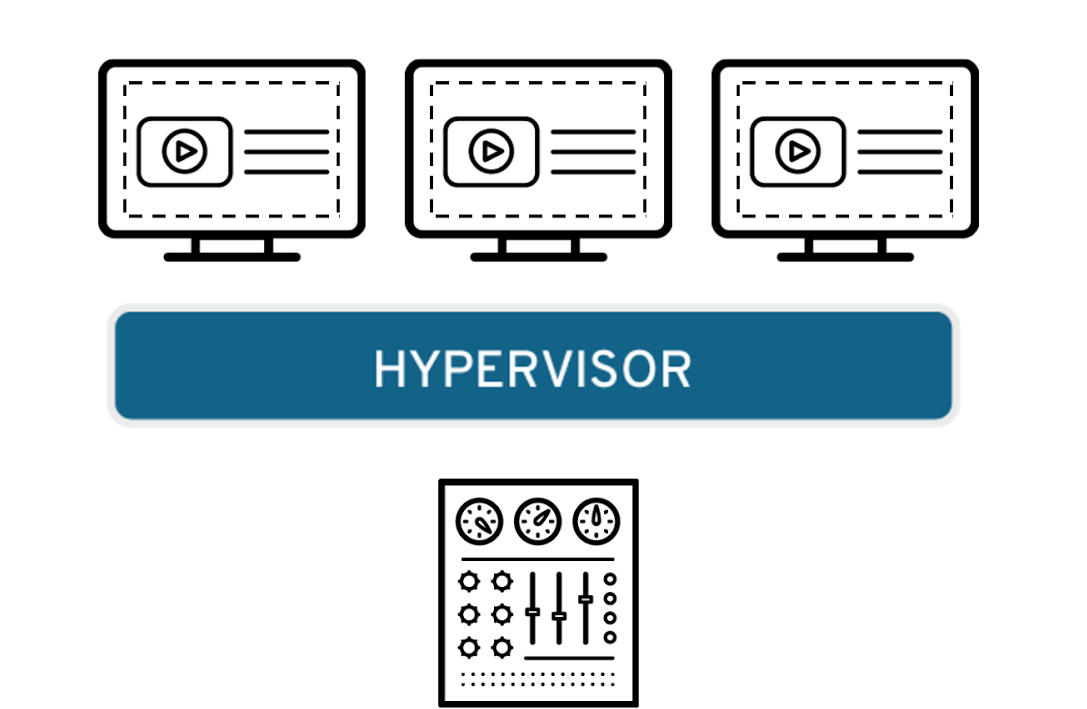

Software called hypervisors separates the physical resources from the virtual environments, the things that need those resources. Hypervisors can sit on top of an operating system (like on a laptop) or be installed directly onto hardware (like a server), which is how most enterprises virtualize. Hypervisors take your physical resources and divide them up so that virtual environments can use them.

Resources are partitioned as needed from the physical environment to the many virtual environments. Users interact with and run computations within the virtual environment (typically called a guest machine or virtual machine). The virtual machine functions as a single data file. And like any digital file, it can be moved from one computer to another, opened in either one, and be expected to work the same.
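The partitioning described above also suggests a general way to think about the question in this post's title: without overcommitment, the number of VMs a host can be divided into is bounded by whichever resource runs out first. The `Host` class below is a hypothetical toy model, not a real hypervisor API; real hypervisors such as KVM can overcommit CPU and memory, so actual limits depend on configuration and workload.

```python
# Toy model of a hypervisor carving a host's physical resources into VMs.
# Host and its methods are hypothetical; no overcommitment is modeled.
from dataclasses import dataclass, field


@dataclass
class Host:
    cpus: int
    memory_gb: int
    vms: dict[str, tuple[int, int]] = field(default_factory=dict)

    def free(self) -> tuple[int, int]:
        """Return (free CPUs, free GB) not yet reserved by any VM."""
        used_cpu = sum(c for c, _ in self.vms.values())
        used_mem = sum(m for _, m in self.vms.values())
        return self.cpus - used_cpu, self.memory_gb - used_mem

    def create_vm(self, name: str, cpus: int, memory_gb: int) -> bool:
        """Reserve resources for a new VM; refuse if the host cannot fit it."""
        free_cpu, free_mem = self.free()
        if cpus > free_cpu or memory_gb > free_mem:
            return False
        self.vms[name] = (cpus, memory_gb)
        return True


host = Host(cpus=16, memory_gb=64)
count = 0
while host.create_vm(f"vm{count}", cpus=2, memory_gb=8):
    count += 1
print(count)        # 8 VMs of 2 CPUs / 8 GB fit on this host
print(host.free())  # (0, 0)
```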

When the virtual environment is running and a user or program issues an instruction that requires additional resources from the physical environment, the hypervisor relays the request to the physical system and caches the changes—which all happens close to native speed (particularly if the request is sent through an open source hypervisor based on KVM, the Kernel-based Virtual Machine).

Types of virtualization

Data virtualization

Data that’s spread all over can be consolidated into a single source. Data virtualization allows companies to treat data as a dynamic supply, providing processing capabilities that can bring together data from multiple sources, easily accommodate new data sources, and transform data according to user needs. Data virtualization tools sit in front of multiple data sources and allow them to be treated as a single source, delivering the needed data, in the required form, at the right time to any application or user.
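A data-virtualization layer can be sketched as a single query interface sitting in front of several sources, pulling and reshaping records at query time instead of copying them into a new store. The `VirtualView` class, the two sources, and their field names are all hypothetical; production tools add query push-down, caching, and security on top of this idea.

```python
# Sketch of a data-virtualization layer: one interface over many sources.
# VirtualView, the sources, and the field names are hypothetical.
from typing import Any, Callable, Iterable

Record = dict[str, Any]


class VirtualView:
    """Presents several sources as one; records are fetched at query time."""

    def __init__(self) -> None:
        self._sources: dict[str, Callable[[], Iterable[Record]]] = {}

    def add_source(self, name: str, fetch: Callable[[], Iterable[Record]]) -> None:
        """Register a new source; existing consumers need no changes."""
        self._sources[name] = fetch

    def query(self, transform: Callable[[Record], Record] = lambda r: r) -> list[Record]:
        """Pull from every source, tag each record's origin, apply the transform."""
        rows = []
        for name, fetch in self._sources.items():
            for record in fetch():
                rows.append(transform({**record, "source": name}))
        return rows


def crm_source() -> list[Record]:
    return [{"customer": "Acme", "revenue_usd": 1200}]


def billing_source() -> list[Record]:
    return [{"customer": "Globex", "revenue_usd": 800}]


def revenue_in_thousands(r: Record) -> Record:
    """A consumer-specific transform: report revenue in thousands of USD."""
    return {**r, "revenue_usd": r["revenue_usd"] / 1000}


view = VirtualView()
view.add_source("crm", crm_source)
view.add_source("billing", billing_source)
print(view.query(revenue_in_thousands))
```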

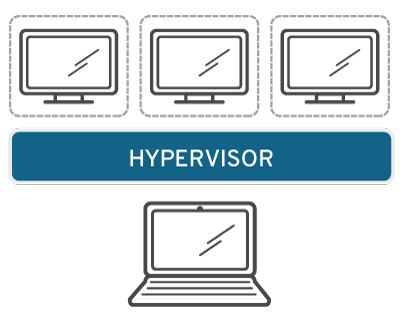

Desktop virtualization

Easily confused with operating system virtualization (which allows you to deploy multiple operating systems on a single machine), desktop virtualization allows a central administrator (or automated administration tool) to deploy simulated desktop environments to hundreds of physical machines at once. Unlike traditional desktop environments that are physically installed, configured, and updated on each machine, desktop virtualization allows admins to perform mass configurations, updates, and security checks on all virtual desktops.

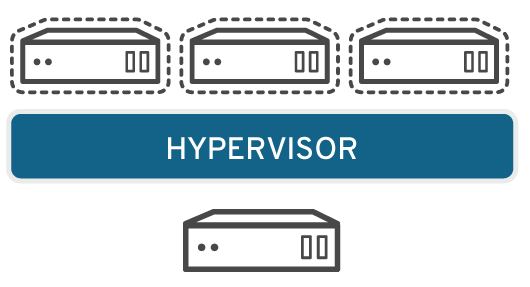

Server virtualization

Servers are computers designed to process a high volume of specific tasks really well so other computers, like laptops and desktops, can do a variety of other tasks. Virtualizing a server lets it do more of those specific functions and involves partitioning it so that the components can be used to serve multiple functions.

Operating system virtualization

Operating system virtualization happens at the kernel, the central task manager of an operating system. It’s a useful way to run Linux and Windows environments side by side. Enterprises can also push virtual operating systems to computers, which:

- Reduces bulk hardware costs, since the computers don’t require such high out-of-the-box capabilities.
- Increases security, since all virtual instances can be monitored and isolated.
- Limits time spent on IT services like software updates.

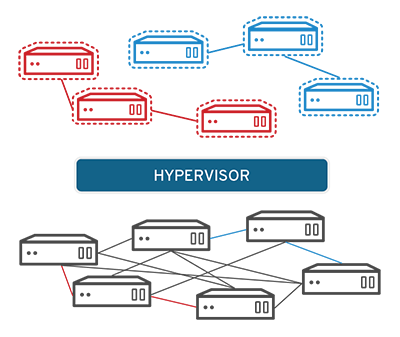

Network functions virtualization

Network functions virtualization (NFV) separates a network's key functions (like directory services, file sharing, and IP configuration) so they can be distributed among environments. Once software functions are independent of the physical machines they once lived on, specific functions can be packaged together into a new network and assigned to an environment. Virtualizing networks reduces the number of physical components—like switches, routers, servers, cables, and hubs—that are needed to create multiple, independent networks, and it’s particularly popular in the telecommunications industry.

Why migrate your virtual infrastructure to Red Hat?


Because a decision like this isn’t just about infrastructure. It’s about what your infrastructure can (or can’t) do to support the technologies that depend on it. Being contractually bound to an increasingly expensive vendor limits your ability to invest in modern technologies like clouds, containers, and automation systems.

But our open source virtualization technologies aren’t tied to increasingly expensive enterprise-license agreements, and we give everyone full access to the same source code trusted by more than 90% of Fortune 500 companies.* So there’s nothing keeping you from going Agile, deploying a hybrid cloud, or experimenting with automation technologies.

*Red Hat client data and Fortune 500 list, June 2022

What is the highest number of virtual machines a cloud service can hold?

The maximum number of virtual machines a cloud service can contain is 50. A virtual machine can be understood as a guest that is created within a computing environment called a host.

How many instances can be created in Google Cloud?

By default, Cloud Run services are configured to scale out to a maximum of 100 instances. You can change the maximum instances setting using the Google Cloud console, the gcloud command line, or a YAML file when you create a new service or deploy a new revision.

What is the maximum number of virtual NICs per VM in GCP?

The maximum number of virtual network interfaces (vNICs) per VM scales with the number of vCPUs, with a minimum of 2 and a maximum of 8.

How many zones can there be in a GCP region?

A region is a specific geographical location where you can host your resources. Regions have three or more zones.
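Two of the sizing rules in these answers, Cloud Run's default instance cap and the vNIC limit, reduce to simple arithmetic, sketched below. The function names are hypothetical. The Cloud Run estimate assumes Cloud Run's default of 80 concurrent requests per instance and treats scaling as purely request-driven, which is a simplification: the real autoscaler also considers CPU utilization. The vNIC rule encodes the answer about network interfaces: one vNIC per vCPU, but never fewer than 2 or more than 8.

```python
# Sketch of two Google Cloud sizing rules. Function names are hypothetical.
import math


def cloud_run_instances(concurrent_requests: int, concurrency: int = 80,
                        max_instances: int = 100) -> int:
    """Rough instance count for a request load, capped at max instances.

    Assumes 80 concurrent requests per instance (Cloud Run's default
    concurrency) and ignores CPU-based scaling.
    """
    needed = math.ceil(concurrent_requests / concurrency)
    return min(needed, max_instances)


def max_vnics(vcpus: int) -> int:
    """Maximum vNICs per VM: one per vCPU, never fewer than 2 or more than 8."""
    return min(max(vcpus, 2), 8)


print(cloud_run_instances(1000))    # 13
print(cloud_run_instances(10_000))  # 100 (capped)
print(max_vnics(4), max_vnics(16))  # 4 8
```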
